Structure and Automate AI Workflows with MLOps

Giovanni Ciatto

Dipartimento di Informatica — Scienza e Ingegneria (DISI), Sede di Cesena,

Alma Mater Studiorum—Università di Bologna

(presentation version: 2025-10-22)

Link to these slides

Outline

- Motivation and Context

    - the ML workflow

    - the GenAI workflow

    - need for MLOps, definition, expected benefits

- MLOps with MLflow

    - API, tracking server, backend store, artifact store, setups

    - interactive usage (notebook)

    - batch usage + project setup

    - interoperability with Python libraries

- End-to-end example for classification

- End-to-end example for LLM agents

What is the goal of a Machine Learning workflow?

Training a model from data, in order to:

- make predictions on unseen data,

    - e.g. spam filter

- or mine information from it,

    - e.g. profiling customers

- or automate some operation which is hard to code explicitly

    - e.g. NPCs in video games

What is a model in the context of ML? (pt. 1)

In statistics (and machine learning) a model is a mathematical representation of a real-world process

(commonly attained by fitting a parametric function over a sample of data describing the process)

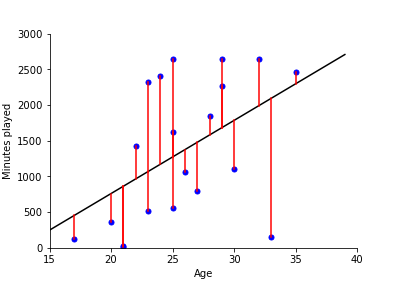

e.g.: $f(x) = \beta_0 + \beta_1 x$ where $f(x)$ is the number of minutes played, and $x$ is the age
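To make "fitting a parametric function over a sample of data" concrete, here is a minimal sketch of ordinary least squares for the line $f(x) = \beta_0 + \beta_1 x$. The ages and minutes below are made-up numbers for illustration only, and `fit_line` is a hypothetical helper name.

```python
def fit_line(xs, ys):
    """Ordinary least squares for f(x) = beta_0 + beta_1 * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    beta_1 = cov_xy / var_x
    # Intercept makes the line pass through the point of means
    beta_0 = mean_y - beta_1 * mean_x
    return beta_0, beta_1


# Made-up sample (assumption): age vs. minutes played
ages = [10, 20, 30, 40]
minutes = [120, 100, 80, 60]

beta_0, beta_1 = fit_line(ages, minutes)


def f(x):
    """The fitted model: predicts minutes played from age."""
    return beta_0 + beta_1 * x
```

Once fitted, the two numbers `beta_0` and `beta_1` are the whole model: `f(25)` predicts the minutes played for an age that was not in the sample.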

What is a model in the context of ML? (pt. 2)

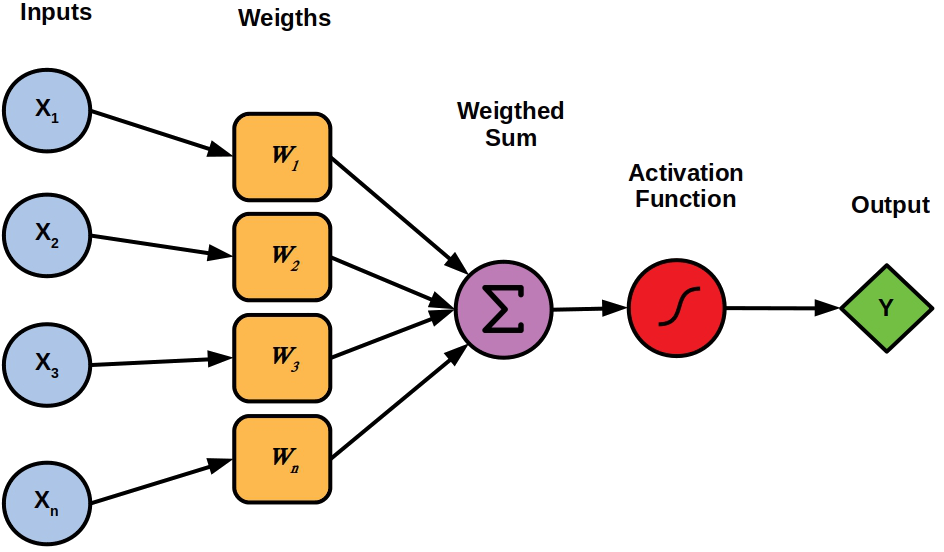

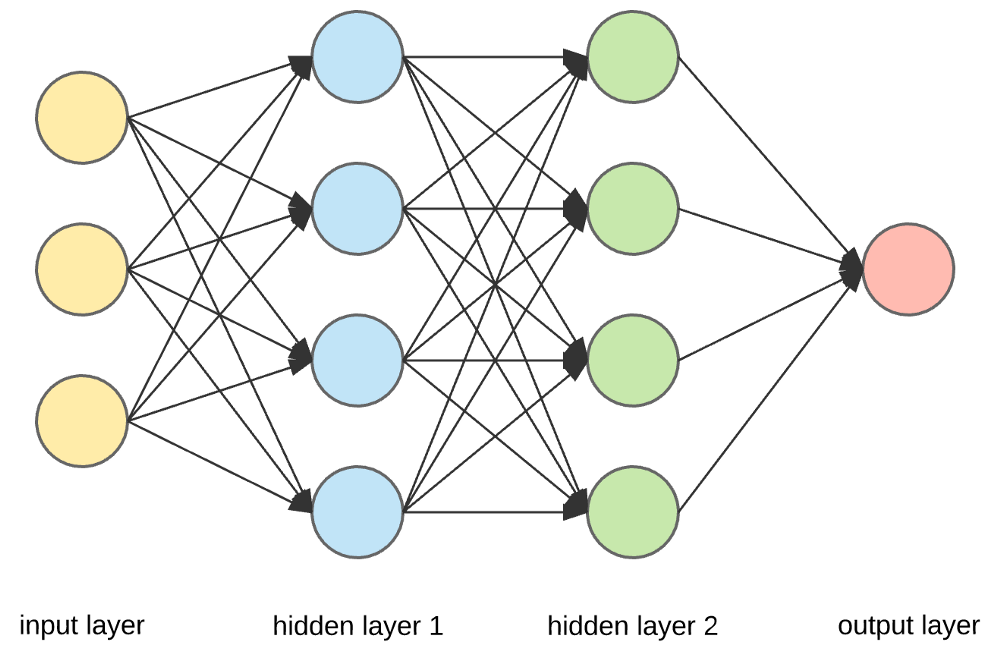

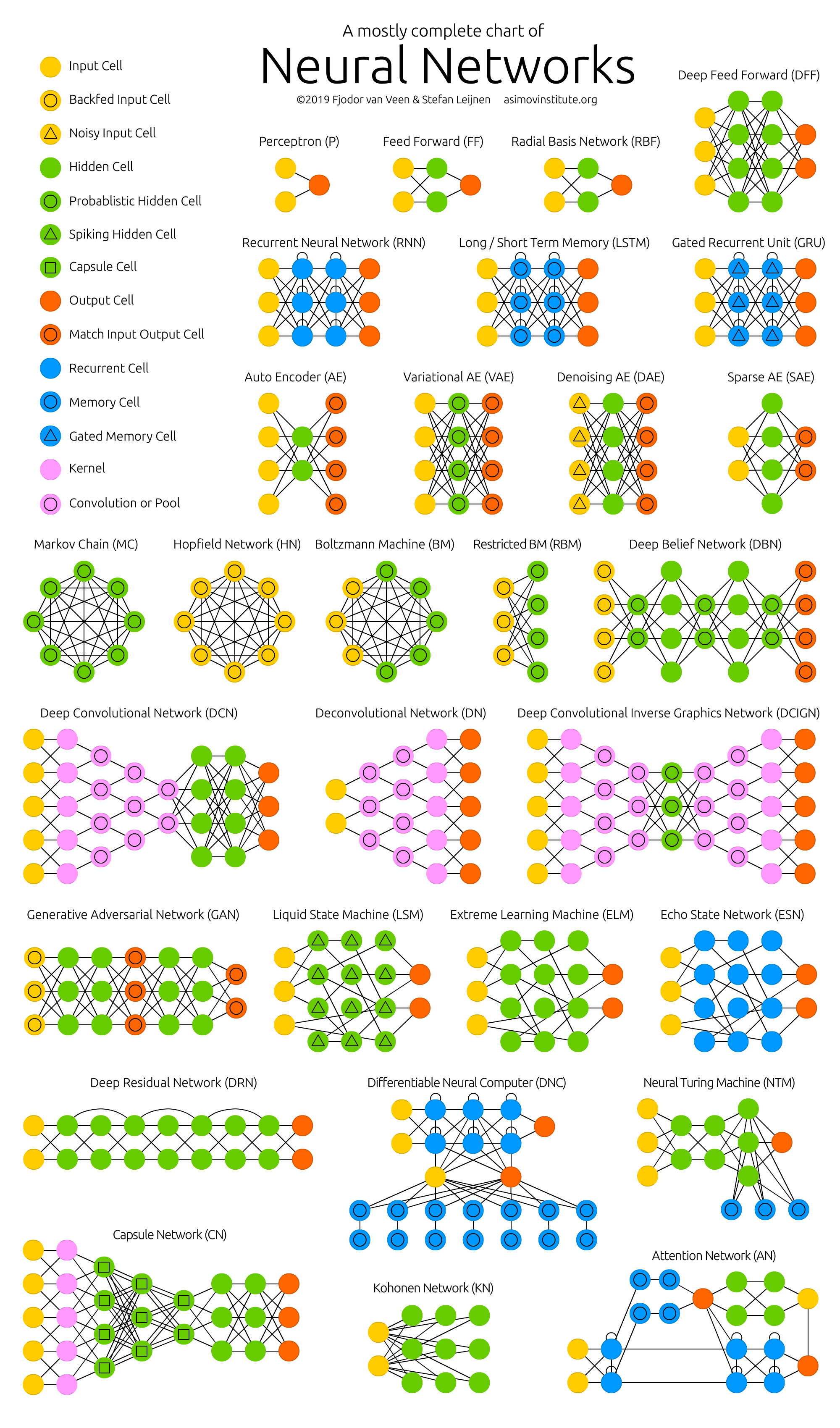

E.g. neural networks (NN) are a popular family of models

Single neuron

(Feed-forward) neural network $\equiv$ cascade of layers

Many admissible architectures, serving disparate purposes
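As a rough illustration of "single neuron" and "cascade of layers", the sketch below implements a neuron as a weighted sum followed by a sigmoid, and a feed-forward network as layers applied one after another. The weights are arbitrary numbers chosen for the example, not a trained model, and the sigmoid is just one possible activation function.

```python
import math


def neuron(inputs, weights, bias):
    """Single neuron: weighted sum of the inputs, then a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weight_rows, biases):
    """A layer is a list of neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


def feed_forward(inputs, layers):
    """Cascade of layers: the output of each layer feeds the next one."""
    activation = inputs
    for weight_rows, biases in layers:
        activation = layer(activation, weight_rows, biases)
    return activation


# Arbitrary (untrained) weights, for illustration only
network = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.0, -1.0]], [0.0]),                    # output layer: 2 inputs -> 1 neuron
]

output = feed_forward([1.0, 1.0], network)
```

Training would consist of adjusting the numbers inside `network` so that `output` gets close to the desired values on the sample data.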

What is the outcome of a Machine Learning workflow?

- A software module (e.g. a Python object) implementing a mathematical function…

    - e.g. predict(input_data) -> output_data

- … commonly tailored on a specific data schema

    - e.g. customer information + statistics about shopping history

- … which works sufficiently well w.r.t. test data

- … which must commonly be integrated into a much larger software system

    - e.g. a web application, a mobile app, etc.

- … which may need to be re-trained upon data changes.
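A minimal sketch of what such a software module might look like: a Python object exposing `fit` and `predict`, tied to a specific data schema. The class name `SegmentMeanModel`, the field names, and the "predict the mean per segment" logic are all assumptions made up for this illustration.

```python
class SegmentMeanModel:
    """Toy model: predicts the mean target observed for each customer segment."""

    # The data schema this model is tailored to (hypothetical field names)
    REQUIRED_FIELDS = ("segment", "past_purchases")

    def __init__(self):
        self.means = {}
        self.default = 0.0

    def _check_schema(self, row):
        missing = [name for name in self.REQUIRED_FIELDS if name not in row]
        if missing:
            raise ValueError(f"row is missing fields: {missing}")

    def fit(self, rows, targets):
        """(Re-)train from scratch: call again whenever the data changes."""
        sums, counts = {}, {}
        for row, y in zip(rows, targets):
            self._check_schema(row)
            segment = row["segment"]
            sums[segment] = sums.get(segment, 0.0) + y
            counts[segment] = counts.get(segment, 0) + 1
        self.means = {s: sums[s] / counts[s] for s in sums}
        self.default = sum(targets) / len(targets)  # fallback for unseen segments
        return self

    def predict(self, rows):
        for row in rows:
            self._check_schema(row)
        return [self.means.get(row["segment"], self.default) for row in rows]


train_rows = [
    {"segment": "student", "past_purchases": 2},
    {"segment": "student", "past_purchases": 4},
    {"segment": "retired", "past_purchases": 9},
]
train_targets = [10.0, 20.0, 60.0]

model = SegmentMeanModel().fit(train_rows, train_targets)
predictions = model.predict([{"segment": "student", "past_purchases": 3}])
```

The downstream system only needs to know the schema and the `predict` call; everything else can change when the model is re-trained.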

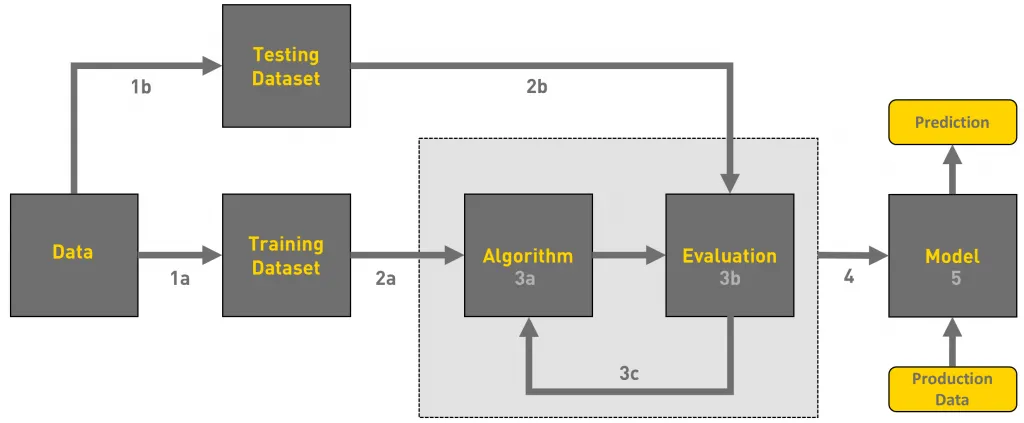

What are the phases of a Machine Learning workflow?

The process of producing an ML model is neither linear nor simple:

- there could be many iterations (up to reaching satisfactory evaluation)

- the whole workflow may be re-started upon data changes

- updates in the model imply further integration/deployment efforts in downstream systems

Activities in a typical ML workflow

- Problem framing: define the business/technical goal

- Data collection: acquire raw data

- Data preparation: clean, label, and transform data

- Feature engineering: extract useful variables from data

- Model training: apply ML algorithms to produce candidate models

- Experimentation & evaluation: compare models, tune hyperparameters, measure performance

- Model packaging & deployment: turn the best model into a service or product

- Monitoring & feedback: check performance in production, detect drift, gather new data, trigger retraining

These steps are cyclical, not linear → one often revisits data, retrains models, or refines features.

Example of ML workflow

Forecast footfall/visits to some office by day/time

- useful for staffing and opening hours planning

- Problem framing: model as a regression task or time-series forecasting task?

- Data collection: gather historical footfall data, calendar events, weather data, etc.

- Data preparation: clean and preprocess data, handle missing values, etc.

- Feature engineering: create relevant features (e.g. day of week, holidays, weather conditions)

- Model training: apply ML algorithms to produce candidate models

- Experimentation & evaluation: compare models, tune hyperparameters, measure performance

- Model packaging & deployment: turn the best model into a service or product

- Monitoring & feedback: monitor performance in production, detect drifts, gather new data, trigger retraining

- new offices or online services may change footfall patterns
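The feature-engineering step of this example could be sketched as follows: a timestamp is turned into a few variables that a forecasting model can use. The `HOLIDAYS` set and the function name `make_features` are hypothetical; a real project would take holidays from an official calendar and would likely add weather and event data.

```python
from datetime import datetime

# Hypothetical holiday calendar as (month, day) pairs; an assumption for this sketch
HOLIDAYS = {(1, 1), (12, 25)}


def make_features(timestamp: datetime) -> dict:
    """Derive calendar features from the timestamp of a footfall observation."""
    return {
        "hour": timestamp.hour,
        "day_of_week": timestamp.weekday(),       # 0 = Monday, 6 = Sunday
        "is_weekend": timestamp.weekday() >= 5,
        "is_holiday": (timestamp.month, timestamp.day) in HOLIDAYS,
    }


features = make_features(datetime(2025, 12, 25, 9, 30))
```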

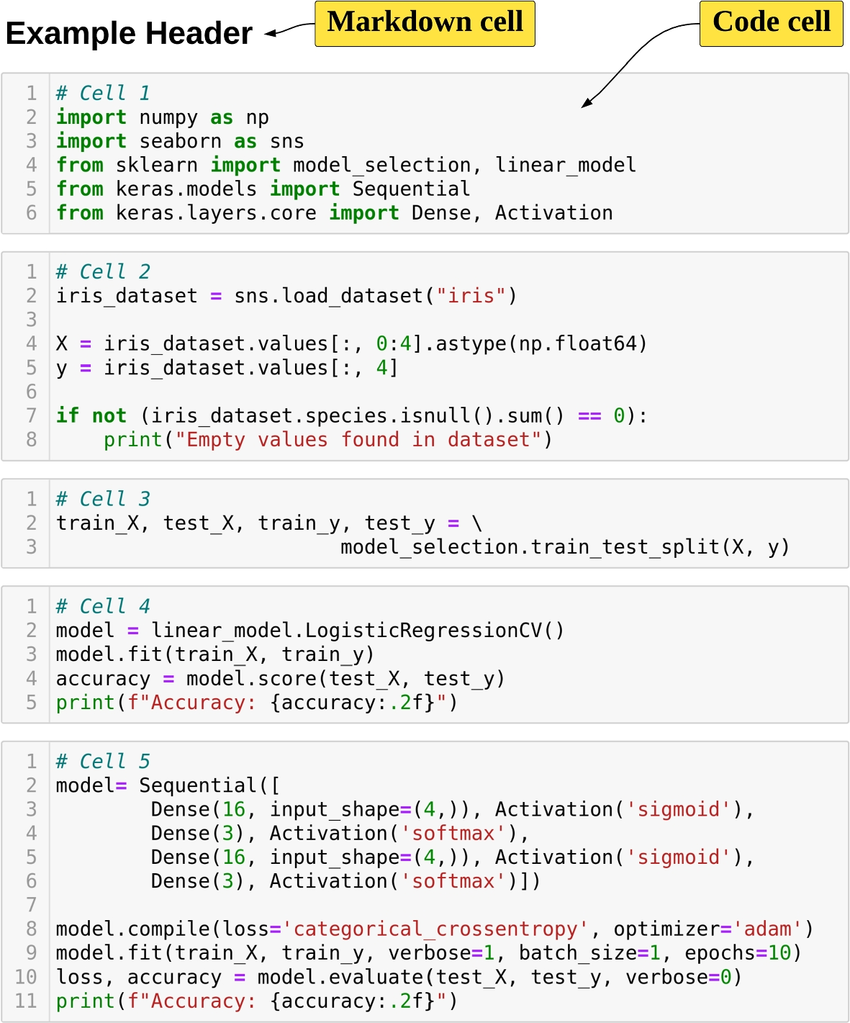

How are Machine Learning workflows typically performed?

Via Notebooks (e.g. Jupyter)

- ✅ Interleave code, textual description, and visualizations

- ✅ Interactive usage, allowing for real-time feedback and adjustments

- ✅ Uniform & easy interface to workstations

- ✅ Easy to save, restore, and share

- ❌ Incentivises manual activities over automatic ones

Pitfalls of manual work in notebooks

- Non-reproducibility: hidden state, out-of-order execution, forgotten seeds

- Weak provenance: params, code version, data slice, and metrics not logged

- Human-in-the-loop gating: “print accuracy → eyeball → tweak → rerun”

- Fragile artifacts: models overwritten, files named final_v3.ipynb

- Environment drift: “works on my machine” dependencies and data paths

- Collaboration pain: merge conflicts, opaque diffs, reviewability issues

Example: why manual runs mislead

- Run 1: random split → train → print accuracy = 0.82

- Tweak hyperparams → rerun only training cell → accuracy = 0.86

- Forgot to fix seed / re-run split → different data, different metric

- No record of params, code, data; “best” model cannot be justified
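The "forgotten seed" problem can be reproduced in a few lines. The sketch below uses a hypothetical `split` helper built on the standard `random` module: with a fixed seed the train/test split is identical across runs, whereas with `seed=None` each call reshuffles the data, so metrics from different runs are computed on different test sets.

```python
import random


def split(rows, test_fraction, seed=None):
    """Shuffle and split rows; a fixed seed makes the split repeatable."""
    rng = random.Random(seed)  # seed=None draws a fresh, unpredictable seed
    shuffled = list(rows)
    rng.shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


data = list(range(100))

# Two runs with the same seed: same test set, so metrics are comparable
train_a, test_a = split(data, 0.2, seed=42)
train_b, test_b = split(data, 0.2, seed=42)
same_split = test_a == test_b

# A run without a seed: the test set is (almost surely) a different one
train_c, test_c = split(data, 0.2)
```

Logging the seed together with the parameters and the metric is what makes the jump from 0.82 to 0.86 attributable to the hyperparameters rather than to the data.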

Consequences

- Incomparable results, irreproducible models

- Hard to automate, schedule, or roll back

- No trace from model → code → data → metrics

Comparison among ML and ordinary software projects

Analogies

- Both produce software modules in the end

- Both involve iterative processes, where feedback is used to improve the product

- Both are driven by tests/evaluations

- Both may benefit from automation

- … and may lose efficiency when activities are performed manually

Differences

- ML projects depend on data (which changes over time)

- Models need training and retraining, not just coding

- Performance may degrade in production (data drift, bias, new environments)

- Many different kinds of expertise are involved (data engineers, software engineers, domain experts, operations)

No structured process $\implies$ ML projects may fail to move from notebooks to real-world use

Machine Learning Operations (MLOps)

The practice of organizing and automating the end-to-end process of building, training, deploying, and maintaining machine-learning models

Expected benefits

- Reproducibility → the same code + same data always gives the same model

- Automation → repetitive steps (training, testing, deployment) are handled by pipelines

- Scalability → easier to scale up the training process to more data, bigger models, or more computing resources

- Monitoring & governance → models are tracked, evaluated, and kept under control

- Collaboration → teams work on shared infrastructure, with clear responsibilities

- Versioning → models, data, and code are versioned and traceable

How does MLOps support ML practitioners

MLOps adds infrastructure + processes + automation to make each step more reliable:

- Data → version control for datasets, metadata, lineage tracking

- Training → automated pipelines that reproduce experiments on demand

- Evaluation → systematic tracking of metrics, logs, and artifacts

- Deployment → continuous integration & delivery (CI/CD) for ML models, often with model registries

- Monitoring → automated checks for performance, drift, fairness, anomalies

- Collaboration → shared repositories, environments, and documentation so teams can work together
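To give a feeling of what "systematic tracking" means, here is a deliberately simplified sketch using only the standard library. It is not MLflow's API: `fingerprint`, `log_run`, and `best_run` are hypothetical helpers, and the in-memory list stands in for a real tracking store. Each run records its parameters, metrics, a hash of the data, and a code version, so that the best model can later be justified.

```python
import hashlib
import json
import time


def fingerprint(rows):
    """Short, stable hash of the dataset: changes whenever the data changes."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


def log_run(store, params, metrics, data, code_version):
    """Append one experiment record to the (in-memory) tracking store."""
    record = {
        "run_id": len(store) + 1,
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
        "data_fingerprint": fingerprint(data),
        "code_version": code_version,  # e.g. a commit hash (placeholder value below)
    }
    store.append(record)
    return record


def best_run(store, metric):
    """Pick the run with the highest value of the given metric."""
    return max(store, key=lambda run: run["metrics"][metric])


runs = []
dataset = [[1, 0], [0, 1]]  # toy data, for illustration only
log_run(runs, {"learning_rate": 0.1}, {"accuracy": 0.82}, dataset, "abc123")
log_run(runs, {"learning_rate": 0.01}, {"accuracy": 0.86}, dataset, "abc123")

winner = best_run(runs, "accuracy")
```

Because both runs share the same `data_fingerprint` and `code_version`, the difference in accuracy can be attributed to the parameters alone.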

What may happen without MLOps

- Data in ad-hoc spreadsheets or local files (no version control)

- Training in personal notebooks (hard to reproduce later)

- Model evaluation is manual and undocumented (hard to compare results)

- Deployment = copy-paste code or manual sharing of a model file

- Monitoring is much harder → models silently degrade

- Collaboration = “send me your notebook by email”

Consequences

- ❌ Fragile, non-reproducible workflows

- ❌ Long delays when models need updating

- ❌ Difficulty scaling beyond a single researcher

- ❌ Low trust from stakeholders (“why did accuracy drop?”)

What about Generative AI workflows?

What is the goal of a Generative AI workflow?

Engineering prompts, tools, vector stores, and agents to constrain and govern the behavior of pre-trained (foundation) models, in order to:

- generate contents (text, images, code, etc.) for a specific purpose

- e.g. bring unstructured data into a particular format

- e.g. produce summaries, reports, highlights

- interpret unstructured data and grasp information from it

- e.g. extract entities, relations, sentiments

- e.g. answer questions about a document

- automate data-processing tasks which are hard to code explicitly

    - e.g. the task is ill-defined (write an evaluation paragraph for each student's work)

    - e.g. the task requires mining information from unstructured data (find the parties involved in this contract)

    - e.g. the task is complex yet too narrow to allow for general purpose coding (plan a vacation itinerary based on user preferences)

- interact with users via natural language

- e.g. chatbots, virtual assistants

Let’s explain the nomenclature

- Pre-trained foundation models (PFM): large neural networks trained on massive datasets to learn general skills (e.g. ‘understanding’ and generating text, images, code)

    - e.g. GPT, PaLM, LLaMA, etc.

- Prompts: carefully crafted textual inputs that guide some PFM to produce desired outputs

    - prompt templates are prompts with named placeholders to be filled with specific data at runtime

        - e.g. Write a summary of the following article: {article_text}

- Tools: external software components (e.g. APIs, databases, search engines) that can be invoked by PFMs to perform specific tasks or retrieve information

    - e.g. a calculator API, a weather API, a database query interface

- Vector stores: specialized databases that store and retrieve high-dimensional vectors (embeddings) for the sake of information retrieval via similarity search

    - e.g. to support retrieval-augmented generation (RAG)

- Agents: software systems that orchestrate the interaction between PFMs and tools, enabling dynamic decision-making and task execution based on the context and user input

    - e.g. a chatbot that uses a PFM for conversation and invokes a weather API when asked about the weather

    - e.g. an assistant that uses a PFM to understand user requests and a database to fetch relevant information
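The notions of prompt template and vector store can be sketched together in a toy retrieval-augmented setup. Everything here is an assumption for illustration: `ToyVectorStore` is a hypothetical in-memory class, and the two-dimensional embeddings are written by hand, whereas a real system would obtain them from an embedding model and store them in a dedicated database.

```python
import math

# A prompt template: named placeholders are filled with data at runtime
PROMPT_TEMPLATE = (
    "Answer the question using only this context:\n{context}\n\n"
    "Question: {question}"
)


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector store (hypothetical, for illustration)."""

    def __init__(self):
        self.items = []  # list of (text, embedding) pairs

    def add(self, text, embedding):
        self.items.append((text, embedding))

    def search(self, query_embedding, k=1):
        """Return the k texts whose embeddings are most similar to the query."""
        ranked = sorted(
            self.items,
            key=lambda item: cosine(item[1], query_embedding),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
store.add("The office opens at 9:00.", [1.0, 0.0])  # hand-made embeddings
store.add("Tenders close on Friday.", [0.0, 1.0])

chunks = store.search([0.9, 0.1], k=1)
prompt = PROMPT_TEMPLATE.format(
    context="\n".join(chunks),
    question="When does the office open?",
)
```

The resulting `prompt` string is what would be sent to the PFM: the retrieved chunk grounds the answer in the stored data.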

What are the outcomes of a Generative AI workflow?

- PFMs are commonly not produced in-house, but rather accessed via APIs… yet the choice of which model(s) to use is crucial

    - models must be available and configured, and most commonly imply costs (per call, per token, etc.)

- A set of prompt templates (text files, or code snippets) that are known to work well for the tasks at hand

    - commonly assessed via semi-automatic evaluations on a validation set of inputs

- A set of tool servers implementing the Model Context Protocol (MCP), so that tools can be invoked by PFMs

    - these are software modules, somewhat similar to ordinary Web services, offering one endpoint per tool

- A set of agents, implementing the logic to orchestrate the interaction between PFMs and tools

    - these are software modules, commonly implemented via libraries such as LangChain or LlamaIndex

- A set of vector stores (if needed), populated with relevant data, and accessible by the agents

    - these are software modules, somewhat similar to ordinary DBMS, offering CRUD operations on data chunks indexed by their embeddings
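The orchestration logic of an agent can be sketched without any real model or protocol. In the toy below, `fake_model` is a hard-coded stand-in for a PFM, the tools are plain Python functions rather than MCP servers, and `run_agent` is a hypothetical name; libraries such as LangChain provide far richer versions of the same loop.

```python
def get_weather(city):
    """Stub tool: a real one would call a weather API."""
    return f"Sunny in {city}"


def add(a, b):
    """Stub tool: a trivial calculator."""
    return a + b


# Registry of tools the agent is allowed to invoke, indexed by name
TOOLS = {"get_weather": get_weather, "add": add}


def fake_model(user_message):
    """Stand-in for a PFM: returns either a tool request or a final answer."""
    if "weather" in user_message.lower():
        return {"tool": "get_weather", "args": {"city": "Cesena"}}
    return {"answer": "I can only talk about the weather."}


def run_agent(user_message, model=fake_model, tools=TOOLS):
    """One agent step: ask the model, run the requested tool (if any), reply."""
    decision = model(user_message)
    if "tool" in decision:
        result = tools[decision["tool"]](**decision["args"])
        return f"Tool {decision['tool']} said: {result}"
    return decision["answer"]


reply = run_agent("What's the weather like?")
```

Keeping the model, the tool registry, and the orchestration as separate pieces is what later allows each of them to be swapped, tested, and tracked independently.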

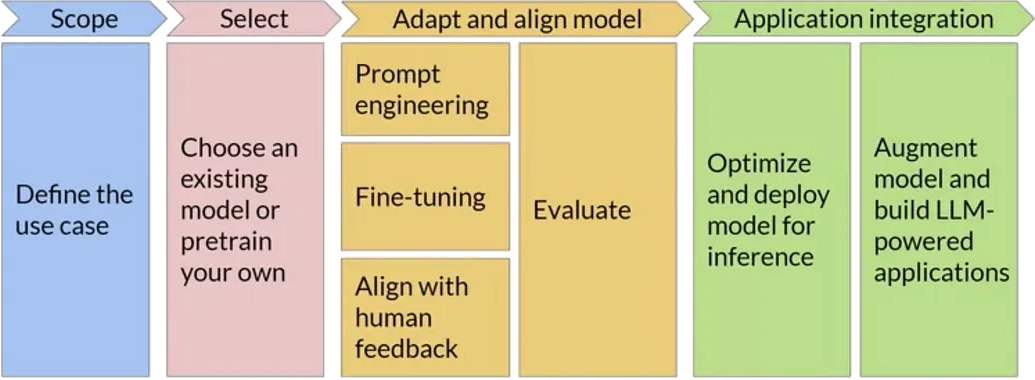

What are the phases of a GenAI workflow?

(Similar to the ML workflow in the sense that the goal is to process data, but different in many details, e.g. no training is involved)

- there could be many iterations (e.g. for PFM selection, and prompt tuning)

- the whole workflow may be re-started upon data changes, or task changes, or new PFM availability

- the interplay between prompts, models, tasks, and data may need to be monitored and adjusted continuously

- the data-flow between components (agents, PFM, tools, vector stores) may need to be tracked for the sake of debugging and monitoring

Peculiar activities in a typical GenAI workflow

- Foundation model selection: choose the most suitable pre-trained model(s) based on task requirements, performance, cost, data protection, and availability

    - implies trying out prompts (even manually) on different models

- Prompt engineering: design, test, and refine prompt templates to elicit the desired responses

    - implies engineering variables, lengths, formats, contents, etc.

- Evaluations: establish assertions and metrics to assess PFM responses to prompts (attained by instantiating templates over actual data)

    - somewhat similar to unit tests in ordinary software

    - important when automatic, as they allow quick evaluations on prompt/model combinations

- Tracking the data-flow between components (agents, PFM, tools, vector stores) to monitor costs, latency, and to debug unexpected behaviors

    - also useful for the sake of auditing and governance
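Evaluations in the "unit test" sense could look like the sketch below: a template is instantiated over a small validation set, each response is checked by an assertion, and a pass rate summarizes the prompt/model combination. The `fake_model` function is a deliberately naive stand-in for a PFM (it returns the last word of the text), so that one case passes and one fails; all names and cases are made up for illustration.

```python
TEMPLATE = "Extract the city mentioned in: {text}"

# Validation set: inputs paired with the expected content of the response
CASES = [
    {"text": "I live in Bologna", "expected": "Bologna"},
    {"text": "Cesena is lovely", "expected": "Cesena"},
]


def fake_model(prompt):
    """Naive stand-in for a PFM: answers with the last word of the input text."""
    text = prompt.split(": ", 1)[1]
    return text.split()[-1]


def evaluate(template, model, cases):
    """Instantiate the template on each case and check the model's response."""
    results = []
    for case in cases:
        prompt = template.format(text=case["text"])
        response = model(prompt)
        results.append({
            "prompt": prompt,
            "response": response,
            "passed": case["expected"] in response,  # the assertion being checked
        })
    pass_rate = sum(r["passed"] for r in results) / len(results)
    return pass_rate, results


pass_rate, results = evaluate(TEMPLATE, fake_model, CASES)
```

Running the same `evaluate` call with a different template or a different model gives directly comparable pass rates, which is what makes automatic evaluations valuable.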

Example of GenAI workflow (pt. 1)

Support public officers in managing tenders through a GenAI assistant that understands and compares procurement decisions transparently.

- Problem Framing:

    - Content Generation: draft and justify comparisons among suppliers’ offers vs. technical specs

    - Interpretation: understand regulatory documents and technical language

    - Automation: retrieve relevant laws, norms, and prior tender examples

    - Interaction: enable officers to query and validate results through natural language

- Data Collection: past tenders’ technical specifications, acts, etc.; regulatory documents, etc.

- Data Preparation:

    - devise useful data schema & extract relevant data from documents

    - anonymize sensitive info (suppliers, personal data)

    - segment documents and index by topic (law, SLA, price table, etc.)

Example of GenAI workflow (pt. 2)

- Prompt Engineering:

    - design prompt templates for comparison, justification, and Q&A

    - use role-based system prompts (You are a procurement evaluator…)

    - allocate placeholders for RAG-retrieved data chunks

    - iterate on template design based on manual tests

- Foundation Model Selection: multi-lingual? specialized in legal/technical text? cost constraints? support for tools?

- Vector stores: storing embeddings for tender documents & specs, legal texts & guidelines, previous evaluations, templates

    - choose embedding model, chunking strategy, and populate vector store

    - engineer retrieval strategies to fetch relevant chunks

- Tools:

    - regulation lookup API + tender database query API

    - report generation out of document templates

    - automate scoring calculations via spreadsheet or Python script generation

- Agents:

    - exploit LLM to extract structured check-lists out of technical specs

    - orchestrate RAG, tool invocations, and prompt templates to score each offer

    - generate comparison reports

    - …
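One of the choices mentioned above, the chunking strategy, can be illustrated with a small sketch. Fixed-size word windows with overlap are only one possible strategy, picked here as an assumption; splitting by section or by topic (law, SLA, price table) would be equally plausible for tender documents. The function name `chunk_words` is hypothetical.

```python
def chunk_words(text, size, overlap):
    """Split text into windows of `size` words, each sharing `overlap` words
    with the previous window, so that sentences cut at a boundary still
    appear whole in at least one chunk."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


chunks = chunk_words("a b c d e", size=3, overlap=1)
```

Each chunk would then be embedded and stored in the vector store, and chunk size and overlap become parameters worth tracking alongside prompts and models.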

LLM Operations (LLMOps)

The practice of organizing and automating the end-to-end process of building, evaluating, deploying, and maintaining GenAI applications

In a nutshell: MLOps for GenAI

Expected benefits

- Systematicity → structured processes to manage prompts, tools, and agents

- Efficiency → reuse of components, templates, and evaluations

- Scalability → easier to test, and update individual components (prompt templates, tools, agents)

- Monitoring & governance → components are tracked, evaluated, and kept under control

How does LLMOps support GenAI practitioners

LLMOps adds infrastructure + processes + automation to make each step more reliable:

- Foundation models → catalogs of available models, with metadata on capabilities, costs, and usage policies

- Provider Gateways → standardized APIs to access different PFM providers (e.g. OpenAI, HuggingFace) uniformly, without code rewrites

- Prompt engineering → version control for prompt templates, systematic testing frameworks

- Tool integration → standardized protocols (e.g. MCP) and libraries to connect tools with PFMs + gateway technologies to aggregate multiple tools

- Agents → provider-agnostic libraries and frameworks (e.g. LangChain) to build, manage, and orchestrate agents

- Vector stores → standardized interfaces to store and retrieve data chunks via embeddings, with support for multiple backend DBMS

- Evaluation & monitoring → automated frameworks to run evaluations, track performance, and monitor costs
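The idea of a provider gateway can be sketched as a thin layer that hides which provider serves a request and records every call for monitoring. The providers below are fakes that never contact any service, and `Gateway`, `EchoProvider`, and `UpperProvider` are hypothetical names; real gateways wrap the client libraries of the actual providers.

```python
from typing import Protocol


class Provider(Protocol):
    """Uniform interface that every provider adapter must expose."""

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Fake provider: echoes the prompt back."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class UpperProvider:
    """Fake provider: answers with the prompt in upper case."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Gateway:
    """Routes requests to registered providers and logs each call."""

    def __init__(self):
        self._providers = {}
        self.calls = []  # minimal usage log, e.g. for cost monitoring

    def register(self, name: str, provider: Provider) -> None:
        self._providers[name] = provider

    def complete(self, model: str, prompt: str) -> str:
        response = self._providers[model].complete(prompt)
        self.calls.append({"model": model, "prompt_chars": len(prompt)})
        return response


gateway = Gateway()
gateway.register("echo", EchoProvider())
gateway.register("upper", UpperProvider())

# Switching provider is a matter of changing a name, not rewriting code
first = gateway.complete("echo", "hello")
second = gateway.complete("upper", "hello")
```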

What may happen without LLMOps

- Foundation models are hard-coded in the application

    - making it difficult to switch providers or models

- Prompt templates are scattered in code or documents

    - making it hard to track changes or reuse them

- Tools are manually integrated, leading to:

    - brittle connections,

    - lack of observability,

    - maintenance challenges

- Agents are ad-hoc scripts that mix logic, PFM calls, and tool invocations

    - making them hard to debug, extend or compose

- Vector stores are tightly coupled with specific DBMS

    - making it hard to migrate or scale

- Evaluation & monitoring are manual and sporadic, leading to undetected issues, cost overruns, and loss of trust

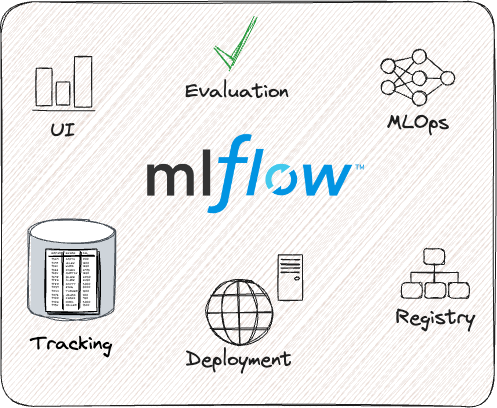

MLOps and LLMOps with MLflow

What is MLflow? https://mlflow.org/

An open-source Python framework for MLOps and (most recently) LLMOps

- usable either in-cloud (e.g. via Databricks) or on-premises (self-hosted)

- we’ll see the latter setup

Outline

- First, we focus on how to use MLflow for the sake of MLOps

- Then, we show how MLflow can be used for LLMOps as well

MLflow for MLOps: main components

Talk is Over

Compiled on: 2025-10-22